Case Study

Audio Event Detection & Labeling for AI Baby Monitor

Client

Baby products retailer

Industry

Baby & Parenting Technology

Service Provided

Audio data annotation, including:

- Event Detection

- Labeling

- Quality Control

- Project Management

Timeline

July 2024 – November 2024

The Vision – AI Baby Monitor

Our client, a key player in the baby products space, had a clear mission: to make parenting a little easier and more intuitive. They wanted to go beyond the traditional baby monitor and build something truly intelligent: a smart device that could not only listen but also understand what’s happening around the baby.

Instead of simply alerting parents and childcare helpers when there’s noise, the goal was to design a monitor that could recognize specific sounds like a baby’s cry, coughing, changes in breathing, or even background cues like footsteps or environmental noises. These sounds, when interpreted correctly, could offer valuable insights into the baby’s comfort, needs, and safety. With timely alerts, this device could empower both parents and babysitters to respond with confidence even from another room.

To make this a reality, they needed a highly capable AI model trained on real-life sounds from the baby’s environment. That’s where our team came in. The device’s success depended on large volumes of clean, well-labeled audio data. DeeLab’s task was to transform just over 100 hours of raw recordings into structured data that the AI could learn from. Every cry, babble, breath, and movement had to be identified and categorized with care. This wasn’t just about machine learning; it was about supporting families with a little extra peace of mind.

Understanding the Challenges

The project presented several challenges, especially in terms of audio event detection and labeling. The baby monitor’s AI needed to be able to detect and categorize various events, from a baby crying or coughing to more ambient sounds like a parent speaking, movements, or even background noises such as dogs barking. While these sounds might seem easy to differentiate in a quiet environment, the reality was more complex.

Some sounds overlapped, and others were subtle or faint, making it difficult for an AI system to distinguish them. For example, a baby’s soft cry might be mistaken for background noise if the audio data wasn’t labeled properly. Labeling audio data at scale also required a balance between speed and accuracy: the client’s timeline was tight, and they needed the data as quickly as possible to train their AI model.

The dataset also had to be diverse enough to cover a wide range of scenarios: different types of cries, varying environmental conditions (such as a noisy room), and various interactions between the baby and their surroundings. Every sound had to be labeled consistently so that the AI could learn to tell them apart.

Our Approach

To tackle the challenges, we developed a streamlined process that combined precision with efficiency. Here’s how we broke down the project:

1. Organizing and Preparing the Audio Data

The first step was to organize the audio data. The client had already recorded a large volume of sound clips, but the data wasn’t categorized in a way that would make labeling easy. Our team helped to structure the data into categories such as “Baby Cry,” “Baby Coos,” “Parent Talking,” “Ambient Noise,” and other relevant sounds. This made it easier for us to quickly identify what each audio clip represented and label it accordingly.

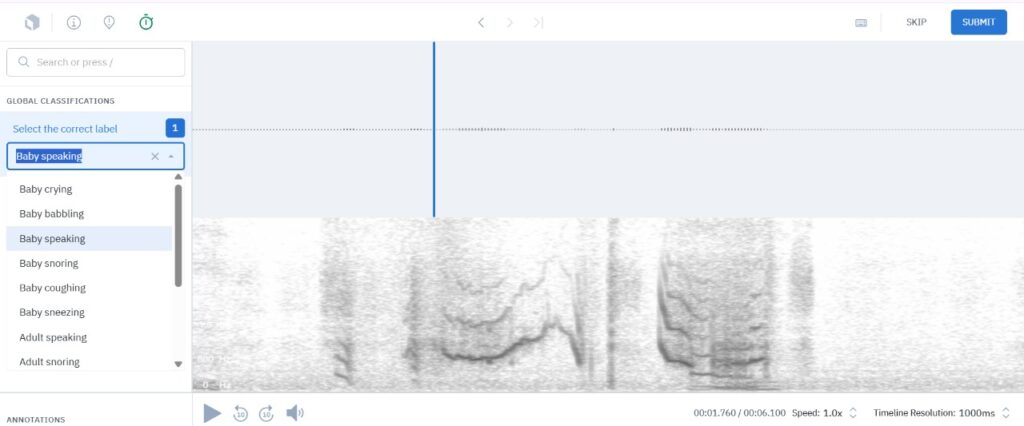

2. Labeling and Event Detection

With the data organized, we began the labeling process. We listened to each audio clip, identifying and marking specific events. For instance, a crying baby would be labeled as “Baby Cry,” while a parent speaking to the baby would be labeled as “Parent Talking.” This wasn’t always straightforward: sometimes a baby’s cry was cut off by another sound, or the environment was noisy, making it harder to identify the right event.

To ensure the accuracy of the labels, we carefully reviewed all of them manually. At certain points, the process was quite time-consuming, especially when dealing with more detailed or nuanced cases. However, this thorough approach allowed us to maintain a high standard of quality throughout the project. In the end, the extra time and attention paid off, resulting in reliable and consistent labels that we are confident in.
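One way to picture a labeled event is as a category plus a time range within a clip. The sketch below is illustrative only and does not describe the tooling actually used on the project: the `AudioEvent` class, its `start_s` and `end_s` fields, and the `find_overlaps` helper are hypothetical names, and the timestamps are made up. Only the category names come from the project. It shows how overlapping events, such as a cry cut off by a parent’s voice, could be surfaced for closer listening.

```python
from dataclasses import dataclass
from itertools import combinations

# Category names come from the case study; everything else is assumed.
LABELS = {"Baby Cry", "Baby Coos", "Parent Talking", "Ambient Noise"}


@dataclass(frozen=True)
class AudioEvent:
    label: str      # one of LABELS
    start_s: float  # hypothetical field: event onset in the clip, seconds
    end_s: float    # hypothetical field: event offset in the clip, seconds

    def __post_init__(self):
        # Reject labels outside the agreed category set and empty ranges.
        if self.label not in LABELS:
            raise ValueError(f"unknown label: {self.label!r}")
        if not 0 <= self.start_s < self.end_s:
            raise ValueError("expected 0 <= start_s < end_s")


def find_overlaps(events):
    """Return every pair of events whose time ranges intersect."""
    return [
        (a, b)
        for a, b in combinations(events, 2)
        if a.start_s < b.end_s and b.start_s < a.end_s
    ]


# Made-up example clip: the cry and the parent's voice overlap.
clip_events = [
    AudioEvent("Baby Cry", 0.0, 4.2),
    AudioEvent("Parent Talking", 3.5, 7.0),
    AudioEvent("Ambient Noise", 8.0, 10.0),
]
overlaps = find_overlaps(clip_events)
for first, second in overlaps:
    print(f"{first.label} overlaps {second.label}")
```

Validating the label against a fixed set at creation time is one simple way to keep category names consistent across labelers.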

3. Quality Control and Consistency

The quality control process was crucial to the success of the project. Since the AI model would only be as good as the data it was trained on, it was essential that the labels were consistent and error-free. We implemented a two-step review process to catch any mistakes:

First Review: Before submitting their work for quality checks, labelers were responsible for reviewing and verifying their own labels. They followed internal guidelines to ensure that every sound was labeled according to a standardized set of rules.

Second Review: After the labelers’ self-review, a dedicated quality control team conducted a second, independent review. They carefully checked the labels for accuracy and consistency. Any doubts or uncertainties were flagged for further discussion and clarification.

This thorough two-step review process helped ensure the quality and reliability of the labeled data, making it suitable for training the AI model.
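As a rough illustration of the second review, the sketch below compares a labeler’s self-reviewed labels with the labels assigned independently by the quality control team and flags every disagreement for discussion. It assumes one label per clip and uses a hypothetical `compare_reviews` function and made-up clip IDs; it is not the project’s actual review tooling.

```python
def compare_reviews(labeler_labels, qc_labels):
    """Compare first-review labels with independent QC labels.

    Both arguments map a clip ID to a label (an assumed data layout).
    Returns the agreement rate over shared clips and the sorted list
    of clip IDs where the two reviews disagree.
    """
    shared = sorted(labeler_labels.keys() & qc_labels.keys())
    if not shared:
        raise ValueError("the two reviews have no clips in common")
    flagged = [cid for cid in shared if labeler_labels[cid] != qc_labels[cid]]
    agreement = 1 - len(flagged) / len(shared)
    return agreement, flagged


# Made-up example: the reviews disagree on one of four clips.
labeler = {
    "clip_001": "Baby Cry",
    "clip_002": "Baby Coos",
    "clip_003": "Ambient Noise",
    "clip_004": "Parent Talking",
}
qc = {
    "clip_001": "Baby Cry",
    "clip_002": "Baby Cry",
    "clip_003": "Ambient Noise",
    "clip_004": "Parent Talking",
}
agreement, flagged = compare_reviews(labeler, qc)
print(f"agreement: {agreement:.0%}, flagged for discussion: {flagged}")
```

Tracking the agreement rate over time is one possible way to spot categories whose guidelines need clarification.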

4. Collaboration with the Client

Throughout the project, we maintained close communication with the client to ensure that the labeled data met their specific needs. We regularly checked in to discuss any issues or adjustments to the sound categories, ensuring that the labels aligned with the client’s vision for the product. The collaborative nature of the project helped us stay on track and make quick adjustments when necessary.

The Results

Once the audio data was labeled, the client fed it into their AI model, which improved the monitor’s ability to detect and classify different sounds. The AI now recognized not just basic sounds like crying but also more complex events such as a baby’s breathing, a parent’s voice, or the sound of a baby moving around.

Accurate AI Performance: The AI model was able to accurately identify and differentiate between a wide variety of sounds, ensuring that the monitor provided the right alerts to parents. Whether the baby was crying, cooing, or simply playing, the monitor was able to provide real-time, reliable feedback.

Faster Time-to-Market: By streamlining the labeling process and working in close collaboration with the client, we helped them accelerate the development timeline and bring their product to market faster. The client was able to launch the AI-powered baby monitor ahead of schedule, giving them a competitive edge in the industry.

Scalability for Future Updates: With the system in place, the client now has a scalable process for labeling future audio data. As they continue to improve the AI model and expand the range of sounds it can detect, they can rely on our efficient labeling process to keep the system up to date.

What We Learned

This project highlighted the critical role of high-quality labeled data in training reliable AI systems. By delivering accurately annotated and well-structured audio data, our team helped lay the foundation for a smarter baby monitor, one designed to better recognize real-life baby sounds and support the needs of modern parents.

It also offered valuable insights into the challenges of labeling complex, overlapping audio events at scale. From soft cries to background interactions, every detail mattered in helping the AI learn to differentiate meaningful sounds from ambient noise.

We’re proud of the contribution we made and the part data annotation plays in shaping thoughtful, human-centered AI products that make a difference in everyday life.

Project in Numbers

Total workload: 436 hrs 31 min

- Total number of labeled audio files: 34,489

- Total length of audio: 101 hrs 37 min

- Number of audio annotation experts from DeeLab: 4
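A few derived figures help put these numbers in context. The short calculation below uses only the totals listed above. It works out to an average of about 10.6 seconds of audio per file and roughly 4.3 hours of work per hour of audio. The per-expert figure assumes the total workload is the combined effort of all four experts, split evenly, which is an assumption and not a reported number.

```python
def to_minutes(hours, minutes):
    """Convert an hours-and-minutes duration to whole minutes."""
    return hours * 60 + minutes


workload_min = to_minutes(436, 31)  # total workload
audio_min = to_minutes(101, 37)     # total length of audio
files = 34_489                      # labeled audio files
experts = 4                         # annotation experts

# Average length of one labeled file, in seconds.
avg_clip_s = audio_min * 60 / files

# Hours of work spent per hour of audio.
effort_ratio = workload_min / audio_min

# Assumption: the workload was shared evenly among the experts.
hours_per_expert = workload_min / 60 / experts

print(f"average file length: {avg_clip_s:.1f} s")
print(f"work per hour of audio: {effort_ratio:.1f} h")
print(f"workload per expert (even split assumed): {hours_per_expert:.0f} h")
```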