Artificial intelligence runs on data. Every big leap forward has come from training models on huge amounts of information. But gathering real-world data isn’t always simple: it can be costly, slow, and sometimes blocked by privacy concerns. Researchers are therefore turning to synthetic data, which is generated rather than collected yet still realistic enough to train AI systems.

Nothing New With Synthetic Alternatives

Industries have long relied on synthetic alternatives. Textiles blend cotton with polyester, fuels are refined from bio-sources or engineered in labs, and food products use substitutes to improve shelf life or reduce cost. These innovations highlight how synthetic options emerge when natural resources are scarce, expensive, or insufficient to meet demand.

A similar shift is now visible in artificial intelligence. As models require ever-larger datasets, synthetic data is being developed to supplement or replace real-world information.

Synthetic Data

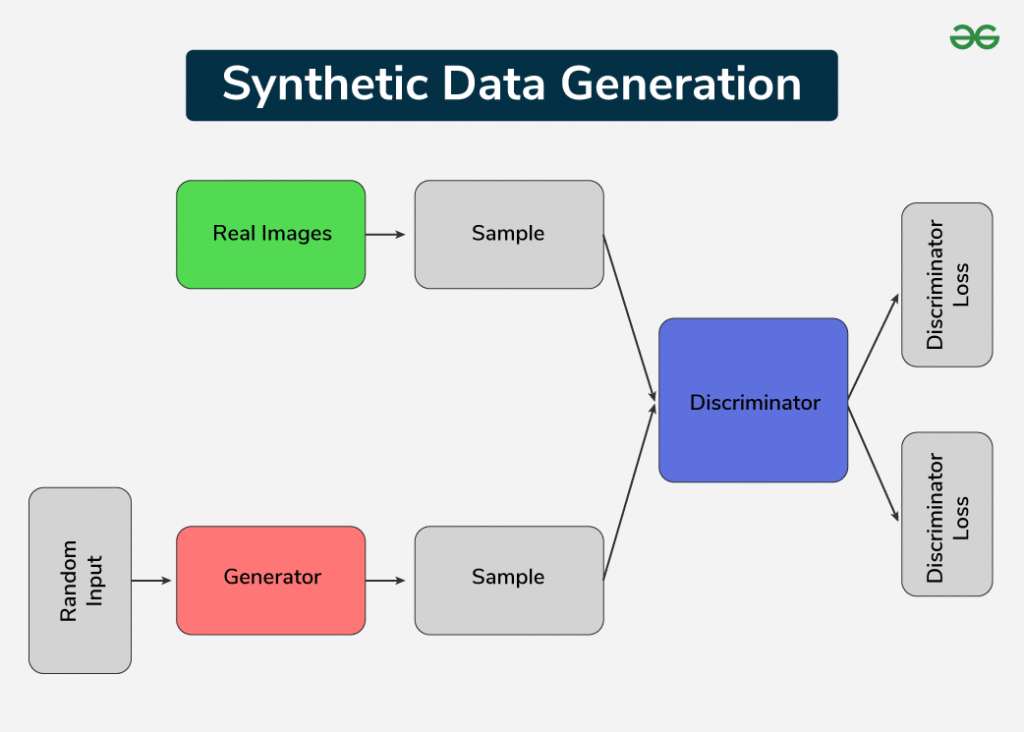

Synthetic data is information created artificially rather than collected from real-world events or people. Engineers use tools like computer simulations, statistical models, or generative AI to build datasets that resemble real ones.
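As a minimal sketch of the statistical-model approach, the Python snippet below fits a normal distribution to a handful of observed values and then draws new, artificial values from it. The measurements, the function names `fit_gaussian` and `generate_synthetic`, and the choice of a single Gaussian are all assumptions made for illustration; real generators are usually far richer.

```python
import random
import statistics

def fit_gaussian(values):
    """Estimate the mean and standard deviation of the observed values."""
    return statistics.mean(values), statistics.stdev(values)

def generate_synthetic(mean, stdev, n, seed=0):
    """Draw n artificial values from a normal distribution with the fitted parameters."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    return [rng.gauss(mean, stdev) for _ in range(n)]

# Hypothetical "real" measurements (e.g. vehicle speeds in km/h), made up for this example.
real_speeds = [42.0, 47.5, 51.2, 38.9, 45.3, 49.8, 44.1, 46.7]

mean, stdev = fit_gaussian(real_speeds)
synthetic_speeds = generate_synthetic(mean, stdev, n=1000)
```

Eight observations become a thousand synthetic ones that share the same overall shape, although anything the fitted model does not capture is missing from the output as well.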

A report by NVIDIA shows how autonomous vehicle software can process simulated data just like it would real sensor input from a car on the road. Developers can create virtual driving scenarios, and the AI models respond as though they were behind the wheel of an actual vehicle. This approach gives self-driving systems a safe way to learn from rare situations before ever encountering them in real traffic.

Waymo has built an entire simulation platform, SimulationCity, to generate synthetic driving scenarios for its self-driving cars. The system can create complete journeys, from short urban trips to long delivery routes, with changing traffic, weather, and lighting conditions. The Waymo Driver processes this synthetic data as if it were on real roads, allowing it to safely learn from countless edge cases before encountering them in the physical world.

The Advantages of Synthetic Data

Cost Savings

Synthetic data can be produced with relatively few resources once the generation tools are in place. This makes it more economical for projects that need large datasets without significant recurring expenses.

Privacy Protection

Artificially generated data is not tied to real individuals, which reduces the risk of exposing sensitive information. It provides a safer way to work with realistic datasets in areas where privacy matters most.

Scalability

The generation process can be scaled up or down depending on project needs. Whether a small dataset or a massive one is required, synthetic data can be produced flexibly, without the practical limits of real-world collection.

Rare Event Simulation

Uncommon scenarios can be generated deliberately with synthetic data. This is useful when training AI to handle unusual or extreme situations that might not appear often in ordinary datasets.
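One simple way to picture this is a scenario sampler whose probabilities can be adjusted by hand. The sketch below is hypothetical: the scenario names, the weights, and the `sample_scenarios` helper are invented for illustration and do not describe any particular simulator.

```python
import random
from collections import Counter

def sample_scenarios(weights, n, seed=0):
    """Draw n scenario names, each with probability proportional to its weight."""
    rng = random.Random(seed)
    names = list(weights)
    return rng.choices(names, weights=[weights[name] for name in names], k=n)

# Assumed frequencies in ordinary driving logs (illustrative numbers only).
observed = {"clear_road": 0.97, "pedestrian_jaywalking": 0.02, "debris_on_road": 0.01}

# Weights chosen deliberately so that rare events dominate the training set.
boosted = {"clear_road": 0.40, "pedestrian_jaywalking": 0.30, "debris_on_road": 0.30}

observed_counts = Counter(sample_scenarios(observed, n=1000))
boosted_counts = Counter(sample_scenarios(boosted, n=1000))
```

With the boosted weights, the rare scenarios appear far more often than they would in a sample drawn at the observed frequencies.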

Customizability

Synthetic data generation can be tailored to match specific project requirements, such as particular environments, conditions, or user behaviors.

Balance and Bias Reduction

Synthetic data offers a way to create datasets that are more balanced. Developers can include scenarios or groups that are often underrepresented, helping reduce bias in AI training.
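A rough sketch of the balancing idea: count the records in each group, then top up the smaller groups with slightly perturbed copies of their existing records until every group matches the largest one. The records, the field names, and the jitter approach are assumptions for illustration; production systems typically use more careful generators.

```python
import random
from collections import Counter

def jitter_record(record, rng, jitter):
    """Copy a record, nudging each float field by up to +/- jitter (as a fraction)."""
    return {
        key: value * (1 + rng.uniform(-jitter, jitter)) if isinstance(value, float) else value
        for key, value in record.items()
    }

def balance_with_synthetic(records, label_key, jitter=0.05, seed=0):
    """Add synthetic records until every label is as common as the largest one."""
    rng = random.Random(seed)
    groups = {}
    for record in records:
        groups.setdefault(record[label_key], []).append(record)
    target = max(len(group) for group in groups.values())
    balanced = list(records)  # the original records are kept unchanged
    for group in groups.values():
        for _ in range(target - len(group)):
            balanced.append(jitter_record(rng.choice(group), rng, jitter))
    return balanced

# Hypothetical driving records: daytime samples heavily outnumber night-time ones.
records = [{"lighting": "day", "speed": 40.0 + i} for i in range(6)]
records += [{"lighting": "night", "speed": 35.0 + i} for i in range(2)]

balanced = balance_with_synthetic(records, label_key="lighting")
label_counts = Counter(record["lighting"] for record in balanced)
```

Note that the synthetic night-time records are only variations of the two originals, so any blind spot in those originals carries over into the balanced set.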

Speed

Because it is created digitally, synthetic data can be generated quickly in the exact quantities needed, allowing AI teams to work faster and explore ideas without delays.

The Challenges of Synthetic Data

Too Perfect

One problem with synthetic data is that it can be “too perfect.” Real-world data is messy, full of outliers, errors, and unexpected noise, and these imperfections often teach AI how to deal with reality. If synthetic datasets are overly polished, models may look accurate in testing but struggle when faced with real-life unpredictability. This gap between the lab and the real world is a key risk developers must manage.

Hidden Bias

Although synthetic data can reduce bias by filling in gaps, it can also create new problems. The rules, assumptions, or simulations used to generate data may themselves carry hidden bias. If the source model overlooks certain groups or scenarios, the synthetic dataset will reflect that blind spot. In the end, the AI model becomes limited by the perspective of the system that generated its data.

Validation Challenges

Validating synthetic data is not always straightforward. Developers need to prove that the artificial examples reflect real-world behavior closely enough for AI training. Without benchmarking against actual datasets, there’s a risk that models will learn patterns that don’t exist outside the lab. This makes strong validation processes just as important as the data itself.
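One possible benchmarking check, sketched below, compares the distribution of a synthetic feature against a real one using the two-sample Kolmogorov-Smirnov statistic: the largest gap between the two empirical cumulative distributions, where 0 means identical samples and 1 means no overlap. The data here is simulated and the names are hypothetical; a real validation process would look at many features and at downstream model performance as well.

```python
import bisect
import random

def ks_statistic(sample_a, sample_b):
    """Largest absolute gap between the empirical CDFs of two samples."""
    a_sorted, b_sorted = sorted(sample_a), sorted(sample_b)
    largest_gap = 0.0
    # The gap between two step-function CDFs peaks at one of the data points.
    for x in a_sorted + b_sorted:
        cdf_a = bisect.bisect_right(a_sorted, x) / len(a_sorted)
        cdf_b = bisect.bisect_right(b_sorted, x) / len(b_sorted)
        largest_gap = max(largest_gap, abs(cdf_a - cdf_b))
    return largest_gap

rng = random.Random(0)
# Stand-in for a real feature, plus two candidate synthetic versions of it.
real = [rng.gauss(50, 5) for _ in range(500)]
faithful_synthetic = [rng.gauss(50, 5) for _ in range(500)]
shifted_synthetic = [rng.gauss(60, 5) for _ in range(500)]

faithful_gap = ks_statistic(real, faithful_synthetic)
shifted_gap = ks_statistic(real, shifted_synthetic)
```

A small gap suggests the synthetic feature follows the real distribution; a large one, as with the shifted sample, flags data that would teach a model patterns that don’t hold outside the lab.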

Limited Context

Some aspects of reality are incredibly difficult to recreate artificially. Human emotions, cultural complexities, or unpredictable environments often carry subtleties that can’t be fully captured by a simulation. Synthetic data may represent the “what happened,” but not always the “why it happened.” Without this deeper context, AI may struggle to handle complex real-world tasks.

Regulatory Uncertainty

Because synthetic data is still an emerging field, rules and standards are not yet consistent worldwide. Some industries, especially healthcare and finance, face strict compliance requirements that haven’t fully caught up to synthetic methods. This regulatory grey area can make organizations cautious about adopting synthetic data at scale. Until clearer guidelines are in place, its use will sometimes be limited by legal and ethical concerns.